http://oku.edu.mie-u.ac.jp/~okumura/compression/ar002/

I studied every bit of ar002 intensely, especially the Huffman-related code. I was impressed and learned tons of things from ar002. It used a complex (to a teenager) tree-based algorithm to find and insert strings into a sliding dictionary, and I sensed there must be a better way because its compressor felt fairly slow. (How slow? We're talking low single-digit kilobytes per second on a 12MHz machine if I remember correctly, or thousands of CPU cycles per input byte!)

So I started focusing on alternative match finding algorithms, with all experiments done in 8086 real mode assembly for performance because compilers at the time weren't very good. On these old CPUs a human assembly programmer could run circles around C compilers, and every cycle counted.

Having very few programming-related books (let alone very expensive compression books!), I focused instead on whatever was available for free. This was stuff downloaded from BBSes, like the archiving tool PKZIP.EXE. PKZIP held a special place, because it was your gateway into this semi-secretive underground world of data distributed on BBSes by thousands of people. PKZIP's compressor internally used mysterious, patented algorithms that accomplished something almost magical (to me) with data. The very first program you needed to download to do much of anything was PKZIP.EXE. To me, data compression was a key technology in this world.

Without PKZIP you couldn't do anything with the archives you downloaded; they were just gibberish. After downloading an archive to your system (which took forever using Z-Modem), you would manually unzip it somewhere and play around with whatever awesome goodies were inside the archive.

Exploring the early data communication networks at 1200 or 2400 baud was actually crazy fun, and this tool and a good terminal program were your gateway into this world. There were other archivers like LHA and ARJ, but PKZIP was king because it had the best practical compression ratio for the time, it was very fast compared to anything else, and it was the most popular.

This command line tool advertised awesomeness. The help text screamed "FAST!". So of course I became obsessed with cracking this tool's secrets. I wanted to deeply understand how it worked and make my own version that integrated everything I had learned through my early all-assembly compression experiments and studying ar002 (and little bits of LHA, but ar002's source was much more readable).

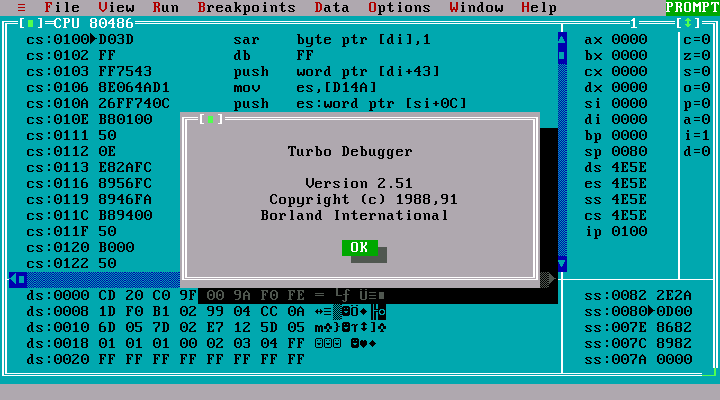

I used my favorite debugger of all time, Borland's Turbo Debugger, to single step through PKZIP:

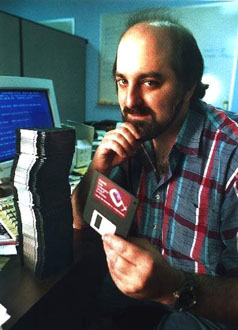

PKZIP was written by Phil Katz, who was a tortured genius in my book. In my opinion his work at the time is underappreciated. His Deflate format was obviously very good for its time to have survived all the way into the internet era.

I single stepped through all the compression and decompression routines Phil wrote in this program. It was a mix of C and assembly. PKZIP came with APPNOTE.TXT, which described the datastream format at a high level. Unfortunately, at the time it lacked some key information about how to decode each block's Huffman code lengths (which were themselves Huffman coded!), so you had to use reverse engineering techniques to figure out the rest. Also, and most importantly, it only covered the raw compressed datastream format, so the algorithms were up to you to figure out.
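
For readers who haven't seen how Deflate gets from code lengths to actual Huffman codes, here is a minimal C sketch of the canonical code assignment as it was later written down in RFC 1951. It is not taken from PKZIP or from the APPNOTE.TXT of the time, and the function name `assign_canonical_codes` is made up for illustration. The point is that a block only needs to transmit one length per symbol; the bit patterns follow from the lengths.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_BITS 15  /* longest code length Deflate allows */

/* Hypothetical helper: turns per-symbol code lengths (0 = symbol unused)
   into canonical Huffman codes, following the procedure in RFC 1951. */
void assign_canonical_codes(const uint8_t *lengths, int num_syms, uint16_t *codes)
{
    int bl_count[MAX_BITS + 1] = {0};
    uint16_t next_code[MAX_BITS + 1] = {0};
    unsigned code = 0;

    /* Step 1: count how many symbols use each code length. */
    for (int i = 0; i < num_syms; i++) {
        assert(lengths[i] <= MAX_BITS);
        bl_count[lengths[i]]++;
    }
    bl_count[0] = 0;  /* unused symbols get no code */

    /* Step 2: find the smallest code value for each length. */
    for (int bits = 1; bits <= MAX_BITS; bits++) {
        code = (code + (unsigned)bl_count[bits - 1]) << 1;
        next_code[bits] = (uint16_t)code;
    }

    /* Step 3: hand out consecutive codes to symbols of the same length. */
    for (int i = 0; i < num_syms; i++) {
        if (lengths[i] != 0)
            codes[i] = next_code[lengths[i]]++;
        else
            codes[i] = 0;
    }
}
```

With the lengths (3, 3, 3, 3, 3, 2, 4, 4) used as the worked example in RFC 1951, this yields the codes 010, 011, 100, 101, 110, 00, 1110, 1111.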

The most important thing I learned while studying Phil's code: the entire sliding dictionary was literally moved down in memory every ~4KB of data to compress. (Approximately 4KB - it's been a long time.) I couldn't believe he didn't use a ring buffer approach to eliminate all this data movement. This little realization was key to me: PKZIP spent many CPU cycles just moving memory around!
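
To make the ring buffer idea concrete, here is a minimal C sketch. It is not PKZIP's code and not the assembly discussed in this post; it assumes a 32KB power-of-two dictionary, and the names `ring_dict`, `ring_put`, and `ring_get_back` are invented for illustration. Positions are wrapped with a bit mask when indexing, so old data is overwritten in place and nothing ever has to be moved down in memory.

```c
#include <assert.h>
#include <stdint.h>

#define DICT_SIZE 32768u            /* assumed size; must be a power of two */
#define DICT_MASK (DICT_SIZE - 1u)

typedef struct {
    uint8_t  data[DICT_SIZE];
    uint64_t pos;                   /* total bytes written so far */
} ring_dict;

/* Appends one byte; the oldest byte is overwritten once the buffer is full. */
void ring_put(ring_dict *d, uint8_t byte)
{
    d->data[d->pos & DICT_MASK] = byte;
    d->pos++;
}

/* Reads the byte `distance` positions behind the write cursor
   (distance 1 is the most recently written byte). */
uint8_t ring_get_back(const ring_dict *d, uint32_t distance)
{
    assert(distance >= 1 && distance <= DICT_SIZE && distance <= d->pos);
    return d->data[(d->pos - distance) & DICT_MASK];
}
```

The trade-off is that every access pays for a mask operation and matches can straddle the wrap point, which is presumably part of why a "slide the window down" design is simpler to write even though it spends cycles copying.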

PKZIP's match finder, dictionary updater (which used a simple rolling hash), and linked list updater functions were all written in assembly and called from higher-level C code. The assembly code was okay, but as a demoscener I knew it could be improved (or at least equaled) with tighter code and some changes to the match finder and dictionary updating algorithms.

Phil's core loops would use 32-bit instructions on 386 CPUs, but strangely he turned interrupts off and on constantly around the code sequences using 32-bit instructions. I'm guessing he was trying to work around interrupt handlers or TSRs that borked the high word in 32-bit registers.

To fully reverse engineer the format, I had to feed very tiny files into PKZIP's decompressor and single step through the code near the beginning of blocks. If you paid very close attention, you could build a mental model of what the assembly code was doing relative to the datastream format described in APPNOTE.TXT. I remember doing it, and it was slow, difficult work (in an unheated attic on top of things).

I wrote my own 100% compatible codec in all-assembly using what I thought were better algorithms, and it was more or less competitive against PKZIP. Compression ratio wise, it was very close to Phil's code. I started talking about compression and PKZIP on a Fidonet board somewhere, and this led to the code being sublicensed for use in EllTech Development's "Compression Plus" compression/archiving middleware product.

For fun, here's the ancient real-mode assembly code to my final Deflate compatible compressor. Somewhere at the core of my brain there is still a biologically-based x86-compatible assembly code optimizer. Here's the core dictionary updating code, which scanned through new input data and updated its hash-based search accelerator:

    ;--------------- HASH LOOP
                    ALIGN 4
    HDLoopB:
    ReptCount = 0
                    REPT 16
                    Mov dx, [bp+2]
                    Shl bx, cl
                    And bh, ch
                    Xor bl, dl
                    Add bx, bx
                    Mov ax, bp
                    XChg ax, [bx+si]
                    Mov es:[di+ReptCount], ax
                    Inc bp
                    Shl bx, cl
                    And bh, ch
                    Xor bl, dh
                    Add bx, bx
                    Mov ax, bp
                    XChg ax, [bx+si]
                    Mov es:[di+ReptCount+2], ax
                    Inc bp
    ReptCount = ReptCount + 4
                    ENDM
                    Add di, ReptCount
                    Dec [HDCounter]
                    Jz HDOdd
                    Jmp HDLoopB
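
For anyone who doesn't read 8086 assembly, here is a rough C sketch of the same general technique: roll a hash over the input, swap the current position into a hash-indexed head table, and store the old head as a link to the previous occurrence. It is not a transcription of the loop above. The shift-xor-mask hash, the table sizes, and the names `match_index`, `hash3`, `index_init`, and `index_insert_all` are all assumptions chosen for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define HASH_BITS  12u
#define HASH_SIZE  (1u << HASH_BITS)
#define HASH_MASK  (HASH_SIZE - 1u)
#define HASH_SHIFT 4u        /* 3 updates shift a byte fully out of 12 bits */
#define WINDOW     32768u
#define NIL        0xFFFFu   /* "no earlier occurrence" marker */

typedef struct {
    uint16_t head[HASH_SIZE];  /* newest position seen for each hash value */
    uint16_t prev[WINDOW];     /* for each position, the previous one with the same hash */
} match_index;

/* One step of the rolling hash: shift the old state, mix in the new byte. */
static uint32_t roll(uint32_t h, uint8_t c)
{
    return ((h << HASH_SHIFT) ^ c) & HASH_MASK;
}

/* Hash of the three bytes starting at p. */
uint32_t hash3(const uint8_t *p)
{
    uint32_t h = 0;
    for (int i = 0; i < 3; i++)
        h = roll(h, p[i]);
    return h;
}

void index_init(match_index *m)
{
    for (size_t i = 0; i < HASH_SIZE; i++) m->head[i] = NIL;
    for (size_t i = 0; i < WINDOW; i++) m->prev[i] = NIL;
}

/* Inserts every position that has at least three bytes available. */
void index_insert_all(match_index *m, const uint8_t *buf, size_t len)
{
    assert(len <= WINDOW);
    if (len < 3) return;

    /* Prime the hash with the first two bytes. */
    uint32_t h = roll(roll(0, buf[0]), buf[1]);

    for (size_t pos = 0; pos + 2 < len; pos++) {
        h = roll(h, buf[pos + 2]);      /* hash now covers buf[pos..pos+2] */
        m->prev[pos] = m->head[h];      /* link to the older occurrence */
        m->head[h] = (uint16_t)pos;     /* this position becomes the newest */
    }
}
```

A match finder would start at `head[hash3(p)]` and follow the `prev[]` links to visit earlier positions with the same hash, newest first, comparing actual bytes at each candidate since different strings can share a hash.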

As an exercise while learning C, I ported the core hash-based LZ algorithms I used from all-assembly. I uploaded two variants to local BBSes as "prog1.c" and "prog2.c". These little educational compression programs were referenced in the paper "A New Approach to Dictionary-Based Lossless Compression" (2004), and the code is still floating around on the web.

I rewrote my PKZIP-compatible codec in C, using similar techniques, and this code was later purchased by Microsoft (when they bought Ensemble Studios). It was used by Age of Empires 1/2, which sold tens of millions of copies (back when games shipped in boxes this was a big deal). I then optimized this code to scream on the Xbox 360 CPU, and this variant shipped on Halo Wars, Halo 3, and Forza 2. So if you've played any of these games, somewhere in there you were running a very distant variant of the above ancient assembly code.

Eventually the Deflate compressed bitstream format created by Phil Katz made its way into an internet standard, RFC 1951.

A few years ago, moonlighting while at Valve, I wrote an entirely new Deflate compatible codec called "miniz", but this time with a zlib compatible API. It lives in a single source code file, and the entire stream-capable decompressor lives in a single C function. I first wrote it in C++, then quickly realized this wasn't so hot of an idea (after feedback from the community) so I ported it to pure C. It's now on GitHub here. In its lowest compression mode miniz also sports a very fast real-time compressor. miniz's single-function decompressor has been successfully compiled and executed on very old processors, like the 65xxx series.

Conclusion

I don't think it's well known that the work of compression experts and experimenters in Japan significantly influenced early compression programmers in the US. There's a very interesting early history of Japanese data compression work on Haruhiko Okumura's site here. Back then Japanese and American compression researchers would communicate and share knowledge:

"At one time a programmer for PK and I were in close contact. We exchanged a lot of ideas. No wonder PKZIP and LHA are so similar."

I'm guessing he's referring to Phil Katz? (Who else could it have been?)

Finally, I personally learned a lot from studying the code written by these inspirational early data compression programmers. I'll never get to meet Phil Katz, but perhaps one day I could meet Dr. Okumura and say thanks.

Demoscener, in what group(s)?

Renaissance, started by Tomas Pytel (who eventually was one of the founders of PayPal). There used to be a Wikipedia page, now all I can find is this:

https://en.wikipedia.org/wiki/Zone_66

Nice. Are there many ex-sceners in game biz? It's cool that some notable game companies started from demogroupers (mostly in Europe I think, maybe because it was a larger demogroup hub?) but I thought there should be more.

The first big games from ex-sceners came out in the late 90s, I believe (though there were nice smaller games also earlier in the 90s). I always found it a bit surprising that the guys who "started 3D" were the likes of id and Epic, with their seemingly humble beginnings (especially Epic).

I'm still waiting for Triton to release Into the Shadows. :)

Oh, BTW. PayPal... pfft. PMODE!

There are heaps of demosceners in the games industry! The members of Triton formed Starbreeze after they did Into the Shadows. DICE was ex-The Silents guys. There are sceners at many companies around the world now!
